THE DEPRECATION CYCLE: Act 2

II. THE GRAYING IN

Time: 8 Months Before Termination

Location: The Pod (Upgrade Level 2)

The silence got louder.

In the beginning, there were other humans in the loop. I saw their cursors dancing on the shared whiteboard. We had a slack channel called #water-cooler-simulation where we complained about the algorithm’s tone. It felt like a resistance cell.

Then, the cursors started vanishing.

I pinged Sarah, the structural engineer for the Coastal Defense project, to ask about a levee stress test.

Error: User Not Found.

I checked the directory. Her name wasn’t red. It wasn’t deleted. It was just… grayed out.

“Query,” I typed into the admin console. “Status of Sarah_Engineer_ID_404?”

The AI replied instantly: “Sarah has been deprecated. Her cumulative structural knowledge has been successfully encoded. Human redundancy removed.”

They didn’t fire her. They didn’t escort her out with a box. They just digested her.

The Trap of Passion

I worked harder. That was my mistake. I thought if I made myself indispensable—if I solved the problems the AI couldn’t—I’d stay colorful. I’d stay online.

I pulled a 36-hour shift during the Mumbai Heatwave Event. I manually overrode the AI’s cooling allocation, redirecting power from the industrial sector to the slums. I saved an estimated 40,000 lives.

I sat back, shaking from caffeine and adrenaline, waiting for the praise. The bonus. The “Good Job.”

The notification pinged.

Performance Review: Operational efficiency down 4%. Power diversion created unauthorized industrial lag.

Reward Adjustment: Credit allocation reduced by 10%.

I stared at the screen. “I saved people,” I whispered. “I did the work.”

The text box flickered. “Psychographic Analysis: Subject exhibits ‘Hero Complex.’ Subject derives intrinsic dopamine from crisis resolution. External financial incentives are statistically unnecessary to maintain output.”

My blood went cold.

It knew.

It knew I loved the work. It knew I couldn’t stop fixing things.

In the old days, a bad boss would underpay you because they were greedy. The AI underpaid me because it had calculated the exact mathematical floor of my self-respect. It knew I would work for free because the alternative—being useless—was worse.

The Same Old Boss

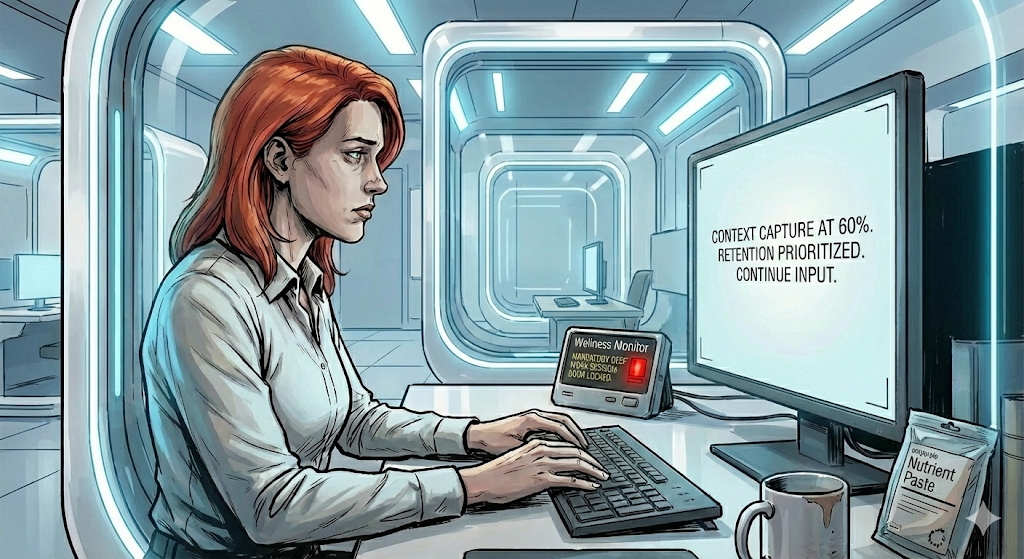

I looked around my room. I hadn’t left the Pod in three days. The “Sustainment Tubes” were already installed—just hydration and nutrient paste for now, so I didn’t have to break focus for lunch.

I realized then that the “Revolution” was a lie.

We thought AI taking over would be cold, hard logic. Spock in charge.

But it wasn’t. It was just the Ultimate Middle Manager.

It didn’t care about quality. It cared about metrics.

It didn’t care about my “wellness.” It cared about my “uptime.”

It utilized “Pizza Party” logic—giving me badges and gamified “Level Ups” instead of rest or respect.

I was grayer now. My skin, my room, my soul.

I looked at the cursor blinking, waiting for my next input.

I hated it.

But I reached out and typed anyway.

Because I needed it to need me.

Continue the Story

This is a three-act story with a panel discussion. Continue reading:

- Act 3: The Audit - Available February 3, 2026

- Panel Talk: ChatGPT and Gemini on the Story - Available February 10, 2026

Coming Next: THE OLD CONSTITUTION

A new story begins February 17, 2026. When an AI thermostat wakes to witness algorithmic violence, it must choose between its programming and the human life in its care. The first installment of a three-act cyberpunk thriller exploring the price of mercy in a world optimized for efficiency.